Creating Tap Applications Using Machine Learning

One of the most exciting parts of releasing the Tap SDKs to the public is seeing what our tappers come up with. We’ve met with some of the most creative developers and hackers, who are doing everything from controlling robots to activating fireworks.

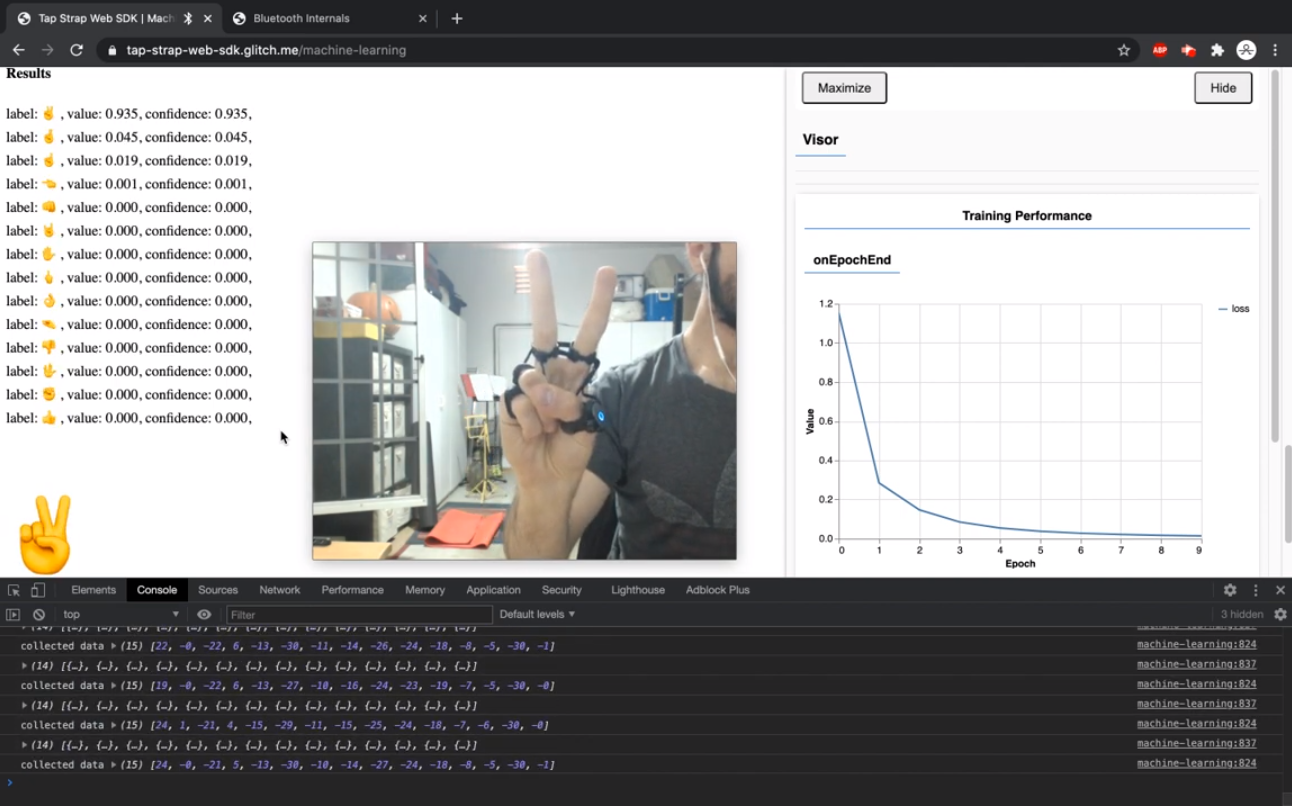

Recently we had a chance to speak with Creative Technologist Zack Qattan, who built a smart machine learning web app using our dev kit and raw sensor data. His web app registers and visualizes your Tap’s behavior, and it also acts as a machine learning kit that you can use to create custom models.

In this video, you can watch Zack demonstrate how his web app translates hand gestures into hand emojis:

The web app interprets raw sensor signals from Tap’s accelerometer and gyroscope. These signals can be mapped to hand gestures, optical mouse movement, and tapping, and the app even lets you create custom haptics. This means it can begin to recognize the position of your hand, so you can assign specific input to specific gestures.
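To make the idea of mapping sensor readings to gestures concrete, here is a minimal sketch of a nearest-centroid classifier. It is not the model Zack's web app uses (the post does not describe it); it is a simple stand-in technique. The function names, the three-value feature vectors, and the gesture labels are all hypothetical, and the numbers are made up for illustration rather than recorded from a Tap.

```python
import math
from collections import defaultdict


def train_centroids(samples):
    """Average the labeled feature vectors for each gesture label.

    `samples` is a list of (label, vector) pairs. Each vector is a flat
    list of sensor values (an assumed layout, not Tap's actual format).
    """
    sums = {}
    counts = defaultdict(int)
    for label, vec in samples:
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def classify(centroids, vec):
    """Return the label whose centroid is closest (Euclidean) to `vec`."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vec))


# Made-up training readings for two hypothetical gestures.
training = [
    ("fist", [0.9, 0.8, 0.9]),
    ("fist", [1.0, 0.9, 0.8]),
    ("open", [0.1, 0.0, 0.1]),
    ("open", [0.0, 0.1, 0.2]),
]
centroids = train_centroids(training)
print(classify(centroids, [0.85, 0.9, 0.8]))  # prints "fist"
```

A real gesture model would likely need many more samples per gesture and some smoothing over time, but the flow is the same: record labeled readings, fit a model, then classify new readings as they arrive.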

For example, Zack used his Machine Learning app to recognize hand gestures as ASL letters:

On the left is a readout of the real-time sensor data for the hand position shown in the video. Below the sensor data, the app outputs the matching ASL letter.

This is exciting not only for assistive technology, but for hand tracking as a whole. Current hand tracking solutions (Oculus, Leap Motion, HoloLens, etc.) rely on cameras to visually register hand position, which limits their use to situations where your hand stays visible to the camera and the scene is well lit.

Unlike these camera-based methods, Tap does not require a camera feed to track hand position, so input can be registered regardless of lighting or line of sight.

Another example Zack demonstrates is translating hand gestures into binary numbers:
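One way to picture the binary example is to treat each finger as one bit. The sketch below assumes the thumb is the lowest bit and the pinky the highest; that ordering, the finger names, and both function names are illustrative choices, not taken from Zack's app.

```python
# Assumed bit order: thumb is bit 0 (value 1), pinky is bit 4 (value 16).
FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def fingers_to_number(raised):
    """Combine the set of raised fingers into a number from 0 to 31."""
    value = 0
    for bit, finger in enumerate(FINGERS):
        if finger in raised:
            value |= 1 << bit
    return value


def number_to_bits(value):
    """Format the number as a 5-digit binary string, pinky first."""
    return format(value, "05b")


number = fingers_to_number({"thumb", "middle"})
print(number, number_to_bits(number))  # prints "5 00101"
```

With five fingers, a single hand can represent 32 distinct values this way.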

The applications for these technologies provide limitless options for gaming, assistive tech, remote control, and input into AR/VR systems. How would you use this smart technology?

Try Zack’s web kit here: https://tap-strap-web-sdk.glitch.me/

Download our SDKs: https://github.com/tapwithus